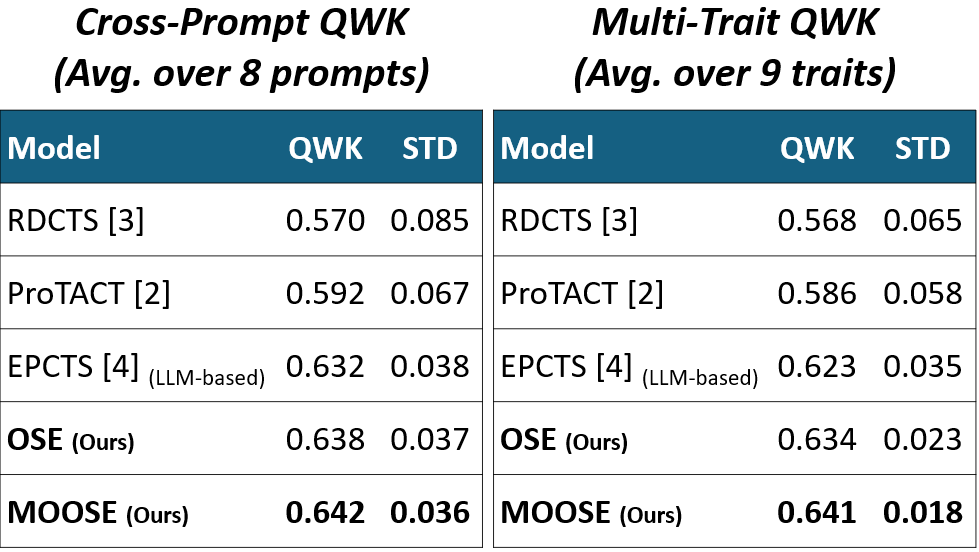

Mixture of Ordered Scoring Experts for Cross-prompt Essay Trait Scoring

MOOSE is a multi-trait, cross-prompt essay scoring model that imitates how human raters evaluate essays. It is composed of three experts:

1) Scoring Expert: Learns the scoring cues inherent in an essay.

2) Ranking Expert: Compares relative quality across different essays.

3) Adherence Expert: Estimates the degree of prompt adherence.

MOOSE not only outperforms previous methods but also remains stable across prompts and traits. The source code of the model is here and the online scoring engine is here.

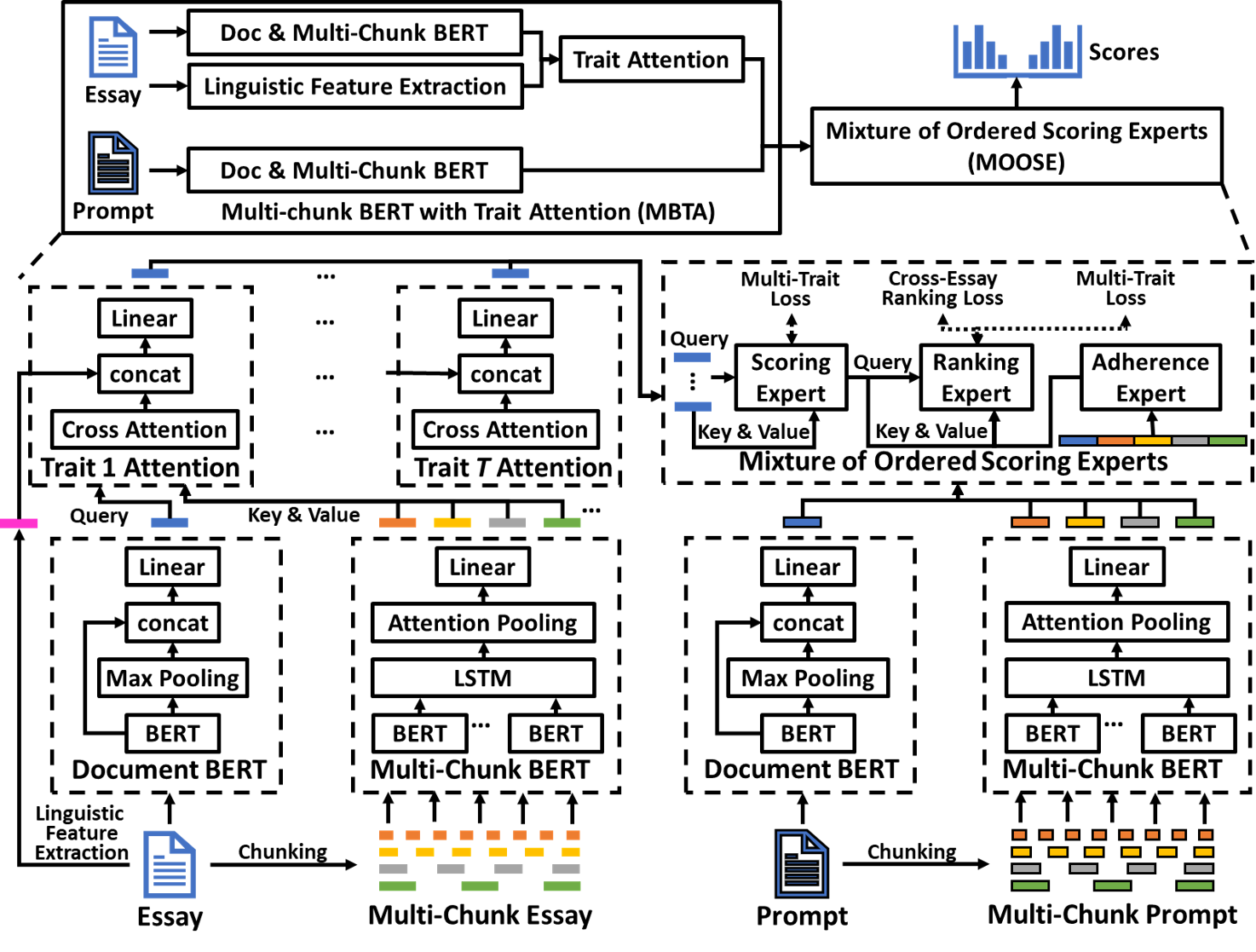

Model overview

The MOOSE framework is the state-of-the-art model for multi-trait cross-prompt essay scoring. It consists of several mechanisms:

Multi-chunk content feature extractor: extracts content features of the essay and prompt at multiple granularities via document BERT and multi-chunk BERT models, following Wang et al. (2022). The content features are composed of chunks at different levels of granularity, enabling the model not only to analyze the essay as a whole but also to capture the performance of different segments within the essay.
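To make the multi-granularity idea concrete, here is a minimal sketch of splitting one essay into chunk sequences at several sizes. It is an illustration only: the function names, the whitespace tokenization, and the chunk sizes are assumptions made for this example, not the tokenizer or settings used by MOOSE.

```python
from typing import Dict, List


def split_into_chunks(tokens: List[str], chunk_size: int) -> List[List[str]]:
    """Split a token list into consecutive, non-overlapping chunks."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    return [tokens[i:i + chunk_size] for i in range(0, len(tokens), chunk_size)]


def multi_granularity_chunks(
    tokens: List[str], chunk_sizes: List[int]
) -> Dict[int, List[List[str]]]:
    """Return one chunked view of the same tokens per chunk size."""
    return {size: split_into_chunks(tokens, size) for size in chunk_sizes}


# Toy essay with whitespace tokenization (assumption: a real pipeline
# would use a BERT tokenizer and much larger chunk sizes).
essay = "the quick brown fox jumps over the lazy dog again".split()
views = multi_granularity_chunks(essay, chunk_sizes=[2, 5])

for size, chunks in views.items():
    print(size, len(chunks), chunks[0])
```

In a full pipeline, each chunk would then be encoded separately, so that small chunks capture local segments while larger ones approach the whole-essay view.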

Multi-trait attention: adopts the trait attention mechanism proposed in ProTACT (Do et al., 2023) to learn trait-specific representations. Unlike ProTACT, we use the document BERT feature as the query and the multi-chunk BERT features as both key and value in the cross-attention layer to learn a non-prompt-specific representation of the essay.
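The query/key/value roles described above can be sketched with plain scaled dot-product attention. This is a simplified, single-head illustration without learned projections or trait-specific parameters; the function names and toy vectors are assumptions for this example, not the MOOSE implementation.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(query: Vector, keys: List[Vector], values: List[Vector]) -> Vector:
    """Attend from one query vector over a set of key/value vectors."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    return [sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim)]


# Hypothetical toy features: one document-level vector as the query,
# three chunk-level vectors used as both keys and values.
doc_feature = [1.0, 0.0]
chunk_features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

attended = cross_attention(doc_feature, chunk_features, chunk_features)
print(attended)
```

The output is a weighted average of the chunk features, with higher weight on chunks that align with the document-level query.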

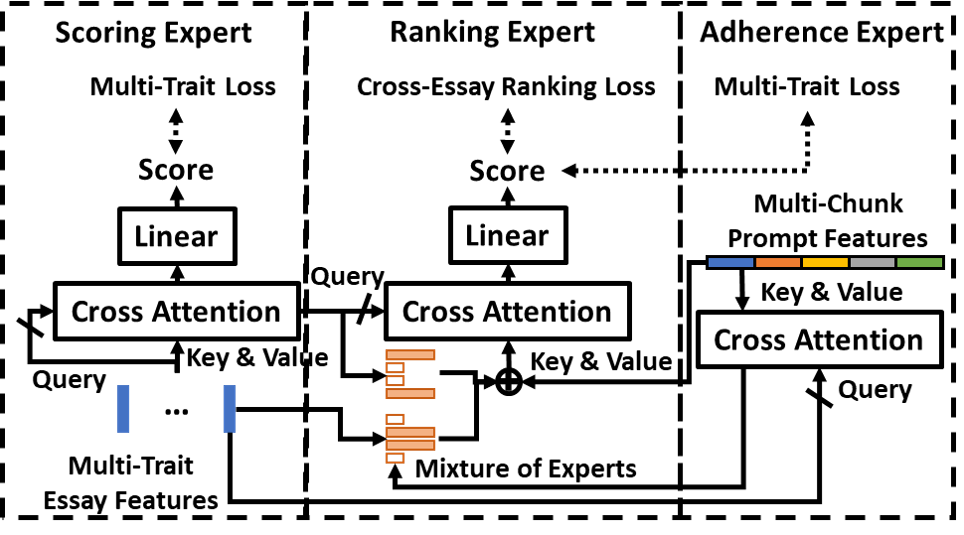

Mixture of Ordered Scoring Experts: a mechanism designed to mimic human expert reasoning through a three-stage Ordered Scoring Experts (OSE) framework: a scoring expert learns trait-specific signals from essay features, a ranking expert adaptively selects scoring cues via a Mixture-of-Experts (MoE) mechanism, and an adherence expert assesses the alignment between essay content and prompt. The model employs a dual-layer cross-attention decoder, with stop-gradient applied to queries to simulate scoring cue retrieval. Together, these components enable more accurate and robust essay trait evaluation.

Citation

@inproceedings{chen2025mixture,

title={Mixture of Ordered Scoring Experts for Cross-prompt Essay Trait Scoring},

author={Chen, Po-Kai and Tsai, Bo-Wei and Wei, Shao Kuan and Wang, Chien-Yao and Wang, Jia-Ching and Huang, Yi-Ting},

booktitle={Proceedings of the 63rd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers)},

pages={18071--18084},

year={2025}

}